Bedlam

A telematic/ telerobotic installation project

By Simon Penny and Bill Vorn

Funded by Langlois Foundation for Science and Art 2001

Overview

Bedlam explores the dislocation and permutation of subjectivity by computation and telematics. Bedlam is a telematic and teleoperative art installation comprising telerobotics, multicamera machine vision, spatialised interactive sound, video, wide bandwidth multimodal networking and web. Unlike most network experiments, Bedlam links, not just computers and virtual environments, but real spatial locations containing physically active people. This commitment to embodiment is a critical experimental intervention in the development of wide bandwidth multimodal networking.

Unlike projects which utilise preexisting media genres and technological channels, in the case of Bedlam, we see the separation of 'content' and 'technology' as artificial. The embodied experience of the users, interacting remotely via a heterogenous and non-standard array of computer mediation is the content and the experience.

Bedlam is an interdisciplinary project which models a novel cultural environment from a complex of emerging technologies including pneumatics and robotics, digital video systems, digital sound and network communication. Bedlam is equal parts play, critique, creative and technological R+D. It offers a critique of academic and popular discourses of cybernetics, artificial intelligence, robotics, 'virtual reality' and 'artificial life'. It also constitutes experimental research in human computer interaction. Bedlam proposes a model of telematic interaction which actively critiques paradigms of computer-human interaction and of VR. We emphasize full-body interaction in which the user, unencumbered by hardware, training or highly symbolic interaction protocols, can drive remote and local systems by the ongoing behavior of their entire body.

Description

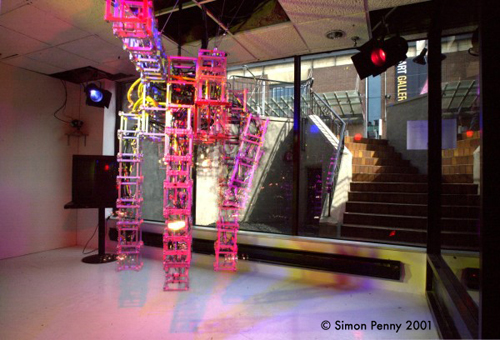

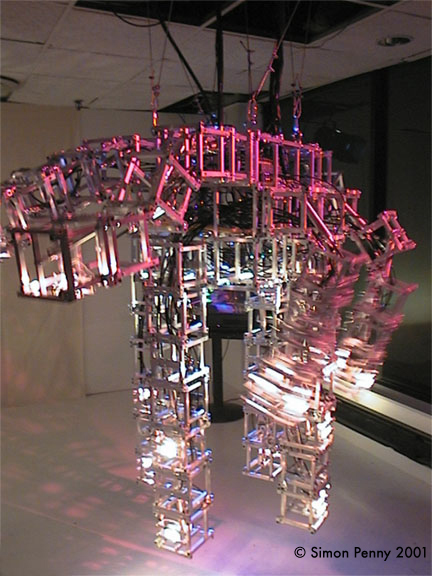

At each of two sites, a participant moves within an interaction stage facing a coordinated array of hissing and clanking telerobotic prosthetics actuated by 'pneumatic muscles', driven by data from the remote user’s digitized 3D image. Video imagery mixed from the vision system cameras and other video inputs is displayed on large screens flanking the robotic installations at each site.

At 'siteA' the user stands within an 'interaction stage', a roughly 10' open walled cube. Beside this interaction stage is a structure of custom robotic devices. Audiences at both sites view the action from behind and beside the interaction stage.

As the users move, they generate real time 8 channel spatialized sound tightly coupled to their movement and gesture. Data about the users movement is passed to the remote 'site B'. This data actuates the robotic devices at 'site B'. The robotic devices are vaguely anthropomorphic, that is they may be reminiscent of animal or human body parts, but they are not assembled in the form of a body. The dynamics of their behavior however reflects the dynamics of the users behavior. The user at site B moves in response to the behavior of the robotic devices and creates local spatialized sound and data about his/her movement is passed back to site A, actuating the robotic devices there. In this way a highly mediated gestural communication loop is formed.

In an alternative interaction scheme, user at siteA influences or perturbs the behavior of robots at siteA. This robot behavior is passed to robots at site B, and vice versa. In this version, the robots are in a constant feedback loop of communication, and that system is perturbed by human users at both ends.

Unlike most interactive systems, our custom multi-camera machine vision system allows for radically active behavior without any hardware or tethers. Real time spatialized sound in each 'interaction stage' is generated by the real time 3D model of the user built by the vision system.

'Sound agents' also share the same virtual space as the user's body model and behave sometimes in a completely autonomous manner sometimes in direct response to the viewer's actions, as their coordinates in the virtual space are mapped onto 3D sound positioning in the real space.

The general effect (for each participant and for on-site audiences) is of a space of partial and quasi- identities in flux, which nonetheless carries strong suggestions of a communicative loop between the two users, mediated by network, robotic and media elements.

Finally, the project also has a web interface that allows observation by remote users, whose participation influences aspects of the events occurring at each site, and at a virtual site built from the data flow between the real sites. Internet users can also interact at the 'sound agent' level by creating new instances or modifying certain parameters of these agents.

BEDLAM VISION, BEDLAM ROBOTS

Realisation of the project entails development of gestural multi-jointed pneumatic robots. This entails the development of a custom quasi-proportional pneumatic actuator system and local network of micorcontrollers (one for each joint, with closed loop feedback from joint encoders. These are in turn linke to a host computer which interprets data from the remote machine vision system as robot control instructions.

Bedlam was a multi-year collaborative machine vision and robotics telematic art project (2001-2004) which received development funding from the Daniel Langlois Foundation for Science and Art. Bedlam was a collaboration between myself (Simon Penny) and Bill Vorn/Yves Bilodeau of Concordia University and Hexagram Research Center, Montreal Canada, with Martin Peach and Jeff Ridenour providing technical assistance.

The project consisted of several exhibitions of parts of the system which was planned to culminate in a live telerobotic telematic link between the Beall Center for Art and Technology, Irvine CA and the Hexagram Institute, Montreal Quebec. In the culminating project, each site was to have a telematically driven robotic sculpture with multiple degrees of freedom, a user stage with the Traces multicamera 3D machine vision system, and 8 channel spatialised interactive sound.

Voices of Bedlam (Beall Center, UCI, 2002).

A volumetric multi-camera machine-vision driven, spatialised interactive sound installation It was an installation of the 8 channel interactive spatialised audio, using the Traces Vision System, custom body segmentation code and custom MAX audio programming.

The image of the user is captured by four video cameras mounted in the upper corners of a cubic room of about 8x8x8ft . The video data is digitised and the image of the user is distinguished from the background. These user silhouettes are then applied to build a low-resolution real-time volumetric model of the user, in a virtual space analogous in scale to the interaction room. The resulting body model is displayed on the color monitor. From this body model, points corresponding to the hands, feet, head and center of mass are deduced (indicated by colored spots on the body model). These procedures are done using custom code running on a custom Linux PC. The values for position and velocity of these points are sent to a G4 mac running Max (an object oriented sound programming environment) where they are applied to a custom sound generation program in which the variables of the body points control variables in several sound treatment processes. The result is the interactive generation of real time eight channel spatialised sound.

Voices of Bedlam was scheduled for exhibition at the Serralves Museum, Oporto, Portugal for the European Cultural Capital celebrations, September 2001. This exhibition had to be cancelled due to international terrorism.

Bedlam Telekinesis. 'Signal' exhibition, Deconsim, Toronto, and Subtle Technologies conference, 2003.

In Bedlam Telekinesis the Traces Vision system was installed an a room next to a large shopfront in which a large pneumatic robot was installed. The behavior of a user in the TVS room drove the robot in real time.

From the exhibition proposal:

The Bedlam project is a public, two-site telematic / telerobotic installation. Funded by the Langlois Foundation, it is a collaboration between Bill Vorn (Concordia, Montreal) and Simon Penny (UCI).

Bedlam is an exploration of the fracturing, permutation and recombination of identities by computation and telematics, a context sometimes referred to as Mixed Reality or Augmented Reality. Bedlam is a telematic and teleoperative art installation comprising telerobotics, machine vision, interactive sound and video, with user interaction at each physical site and via the web. Bedlam has two primary physical sites, more or less identical, and a common virtual site. Online users access the virtual site and video feeds from the phyiscal sites.

Bedlam is an interdisciplinary project which models a novel cultural environment from a complex of technologies including pneumatics and robotics, digital video systems, digital sound and network communication. Bedlam is equal parts play, critique and technological R+D. It offers a critique of academic and popular discourses of cybernetics, artificial intelligence, robotics, 'virtual reality' and 'artificial life'. It also constitutes experimental research in human computer interaction. Bedlam proposes a model of telematic interaction which actively critiques paradigms of computer-human interaction and of VR. We emphasize full-body interaction in which the user, unencumbered by hardware, training or highly symbolic interaction protocols, can drive remote and local systems by the ongoing behavior of their entire body.

In its final form, it will consist of two sites, each with a machine vision stage, spatialised sound, and array of robotic devices and multiple moving video screens. A participant moves within an interaction stage facing a coordinated array of hissing and clanking tele-robotic prosthetics actuated by 'pneumatic muscles', driven by data from the remote user. Video imagery mixed from the vision system cameras and other video inputs is displayed by two video projectors onto two ragged moving drapes flanking the robotic installations at each site.

Vision system data will be sent over the network to drive remote pneumatically actuated robotic sculptures at remote site which enact the dynamics of the users' movements. A user at remote site will move in response to the robot behaviors, generating local sound and driving robots at local site. The result will be a higly mediated telematic communication of gesture and bodily dynamics.

The Bedlam project includes multiple technical development projects in multi-camera volumetric machine vision, interactive eight channel spatialised audio, custom pneumatic robotic sculptures with custom control systems, webprogramming and low latency, multi-modal networking.

The robotic devices will be vaguely anthropomorphic, that is they may be reminiscent of animal or human body parts, but they will not be assembled in the form of a body. The dynamics of their behavior will however reflect the dynamics of the users behavior. The users will move in response to the behavior of the robotic devices and will create local spatialized sound and data about his/her movement will be passed to the other site, actuating the robotic devices there. In this way a highly mediated gestural communication loop is formed. Audiences at both sites will view the action from behind and beside the interaction stage.

Unlike most interactive systems, our custom multi-camera machine vision system allows for radically active behavior without any hardware or tethers. (Please refer to http://www.art.cfa.cmu.edu/Penny/works/traces/Tracescode.html and http://www.art.cfa.cmu.edu/Penny/texts/traces/) Real time spatialized sound in each ‘interaction stage’ is generated by the real time 3D model of the user built by the vision system. The sound has its basis in human vocal noises (yelps, moans, muttering and phatic noises) which behave as independent entities or agents 'attached' to various parts of the users body and respond to the users gesture.

Bedlam will also have a web component. Online participants will be able to view action at both sites and will be able to generate autonomous agents which will inhabit the virtual space. Users located at both sites will percieve the agents as graphically rendered in the virtual space, and represented as spatialised audio.

The general effect (for each participant and for on-site audiences) is of a space of partial and quasi- identities in flux, which nonetheless carries strong suggestions of a communicative loop between the two users, mediated by network, robotic and media elements. The web aspect allows observation by remote users, whose participation influences aspects of the events occurring at each site, and at a virtual site build from the data flow between the sites.

Bedlam: un lieu où régne la confusion de l’identité.

EART studios, Concordia University and Teluq, Montreal, November 2003.

This iteration of Bedlam established connection between TVS user stage and the robot located in different parts of Montreal.